Introducing dag-factory

Apache Airflow is “a platform to programmatically author, schedule, and monitor workflows.” And it is currently having its moment. At DataEngConf NYC 2018, it seemed like every other talk was either about Airflow or mentioned it. There have also been countless blog posts about how different companies are using the tool, and it even has a podcast!

A major use case for Airflow seems to be ETL or ELT or ETTL or whatever acronym we are using today for moving data in batches from production systems to data warehouses. This pattern typically repeats across many pipelines. I recently gave a talk on how we use Airflow at my day job, including how we use YAML configs to make these repeated pipelines easy to write, update, and extend. This approach is inspired by Chris Riccomini’s seminal post on Airflow at WePay. Someone in the audience then asked, “How are you going from YAML to Airflow DAGs?” My answer was that we had a Python file that parsed the configs and generated the DAGs. That answer didn’t satisfy the audience member, or me. This pattern is very common in talks and posts about Airflow, but it seems like we are all writing the same logic independently. Thus the idea for dag-factory was born.

The dag-factory library makes it easy to create DAGs from YAML configuration by following a few steps. First, install dag-factory into your Airflow environment:

```bash
pip install dag-factory
```

Next, create a YAML config file somewhere Airflow can read it, like this:

```yaml
example_dag1:
  default_args:
    owner: 'example_owner'
    start_date: 2018-01-01
  schedule_interval: '0 3 * * *'
  description: 'this is an example dag!'
  tasks:
    task_1:
      operator: airflow.operators.bash_operator.BashOperator
      bash_command: 'echo 1'
    task_2:
      operator: airflow.operators.bash_operator.BashOperator
      bash_command: 'echo 2'
      dependencies: [task_1]
    task_3:
      operator: airflow.operators.bash_operator.BashOperator
      bash_command: 'echo 3'
      dependencies: [task_1]
```
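To make the structure of this config concrete: each key under `tasks` becomes a task, and each `dependencies` list names that task's upstream tasks. The sketch below works on the config as an already-parsed dictionary (roughly what a YAML loader such as PyYAML would return) and derives the edges and one valid run order. It assumes Python 3.9+ for the standard-library `graphlib` module. The helpers `upstream_edges` and `run_order` are hypothetical and are not how dag-factory itself is implemented. They only illustrate how the `dependencies` field reads.

```python
import datetime
from graphlib import TopologicalSorter

# The example config as a YAML loader would return it (PyYAML turns the
# unquoted 2018-01-01 into a datetime.date).
CONFIG = {
    "example_dag1": {
        "default_args": {
            "owner": "example_owner",
            "start_date": datetime.date(2018, 1, 1),
        },
        "schedule_interval": "0 3 * * *",
        "description": "this is an example dag!",
        "tasks": {
            "task_1": {
                "operator": "airflow.operators.bash_operator.BashOperator",
                "bash_command": "echo 1",
            },
            "task_2": {
                "operator": "airflow.operators.bash_operator.BashOperator",
                "bash_command": "echo 2",
                "dependencies": ["task_1"],
            },
            "task_3": {
                "operator": "airflow.operators.bash_operator.BashOperator",
                "bash_command": "echo 3",
                "dependencies": ["task_1"],
            },
        },
    }
}


def upstream_edges(tasks):
    """Hypothetical helper: (upstream, downstream) pairs implied by `dependencies`."""
    edges = []
    for name, spec in tasks.items():
        for upstream in spec.get("dependencies", []):
            if upstream not in tasks:
                raise ValueError(f"{name} depends on unknown task {upstream!r}")
            edges.append((upstream, name))
    return edges


def run_order(tasks):
    """Hypothetical helper: one valid execution order (raises CycleError on cycles)."""
    graph = {name: set(spec.get("dependencies", [])) for name, spec in tasks.items()}
    return list(TopologicalSorter(graph).static_order())


tasks = CONFIG["example_dag1"]["tasks"]
print(upstream_edges(tasks))  # [('task_1', 'task_2'), ('task_1', 'task_3')]
print(run_order(tasks)[0])    # task_1
```

Because `task_2` and `task_3` both list only `task_1`, they have no ordering between them, which is why the resulting graph fans out from `task_1`.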

Then create a `.py` file in your Airflow DAGs folder like this:

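```python
# Airflow's default safe-mode DAG discovery only parses files that mention
# both "airflow" and "DAG", so this import keeps the file from being skipped.
from airflow import DAG

import dagfactory

# Point the factory at the YAML config created above.
dag_factory = dagfactory.DagFactory("/path/to/dags/config_file.yml")

# Build the DAGs and register them in this module's namespace,
# which is where Airflow looks for DAG objects.
dag_factory.generate_dags(globals())
```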
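Why pass `globals()`? Airflow finds DAGs by importing each file in the DAGs folder and collecting the `DAG` objects bound at module level. A factory that builds DAGs dynamically therefore has to attach each one to a module-level name. The sketch below shows that registration pattern in plain Python. `FakeDag` and `generate_into` are hypothetical stand-ins, not dag-factory's or Airflow's API. The real `generate_dags` also builds the operators and wires up their dependencies.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDag:
    """Hypothetical stand-in for airflow.DAG, just enough for this sketch."""
    dag_id: str
    description: str = ""
    task_ids: list = field(default_factory=list)


def generate_into(config, namespace):
    """Build one FakeDag per top-level config key and bind it in `namespace`.

    Passing a module's globals() as `namespace` makes each DAG a module-level
    variable, which is where Airflow's DAG discovery looks for DAG objects.
    """
    for dag_id, dag_config in config.items():
        namespace[dag_id] = FakeDag(
            dag_id=dag_id,
            description=dag_config.get("description", ""),
            task_ids=list(dag_config.get("tasks", {})),
        )


config = {
    "example_dag1": {
        "description": "this is an example dag!",
        "tasks": {"task_1": {}, "task_2": {}, "task_3": {}},
    }
}
generate_into(config, globals())
print(example_dag1.task_ids)  # ['task_1', 'task_2', 'task_3']
```

Using the top-level YAML key as the variable name means each DAG ID in the config must be unique within the file. Otherwise a later entry silently overwrites an earlier one.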

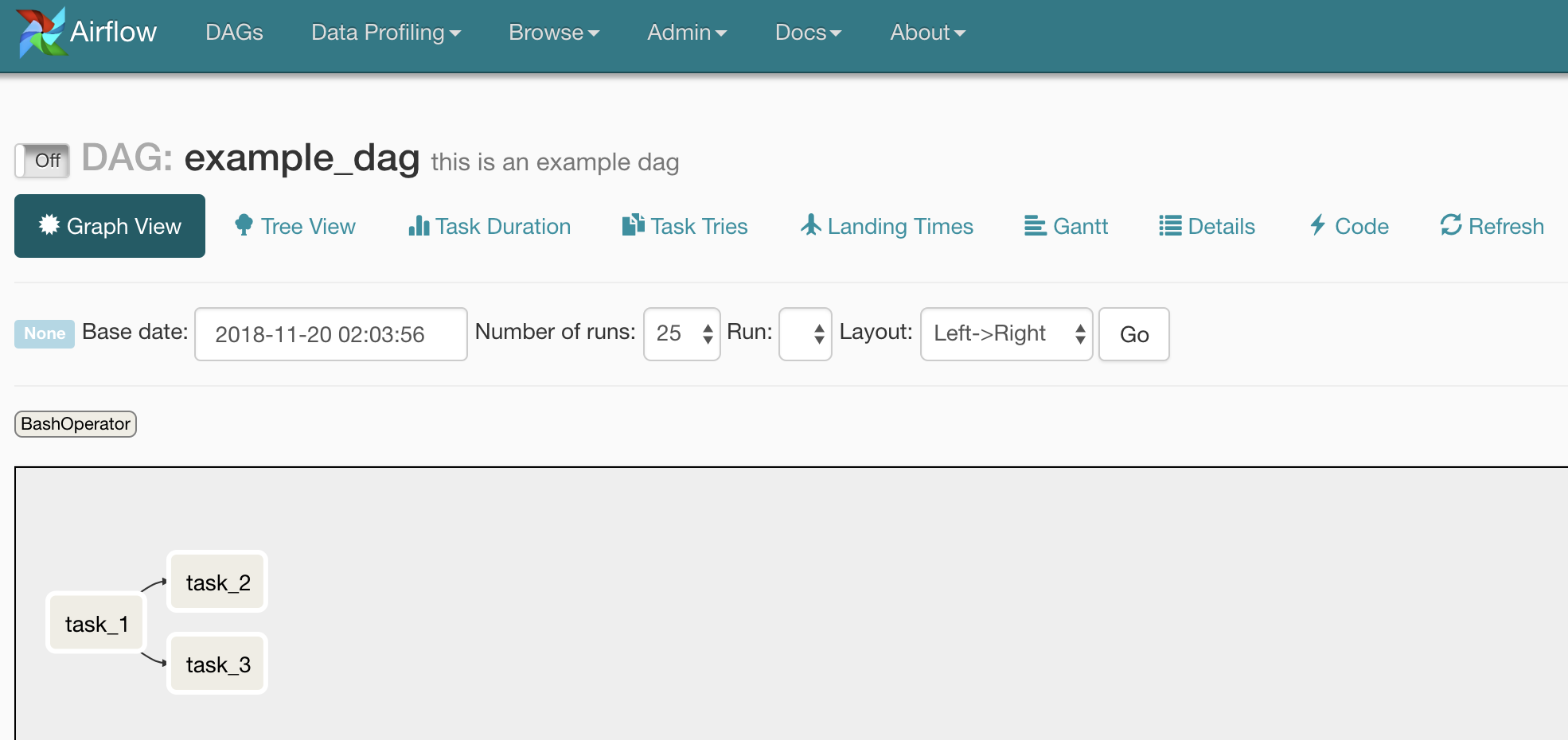

And :bam: you’ll have a DAG running in Airflow that looks like this:

This approach offers a number of benefits, starting with the fact that Airflow DAGs can be created without any Python knowledge. This opens the platform to non-engineers on your team, which can be a productivity boost for both your organization and your data platform.

dag-factory also allows engineers who do not regularly work with Airflow to create DAGs. These people frequently want to use the great features of Airflow (monitoring, retries, alerting, etc.), but learning about Hooks and Operators is outside the scope of their day-to-day jobs. Instead of having to read the docs (ewwww) to learn these primitives, they can write a YAML config just as easily as the cron job (ewwwwwwww) they were going to use. This can dramatically reduce the time it takes to onboard new teams to Airflow.

dag-factory is a brand-new project, so if you try it and have any suggestions or issues, let me know! Similar tools include boundary-layer from Etsy and airconditioner (great name!) from wooga, so check them out too!